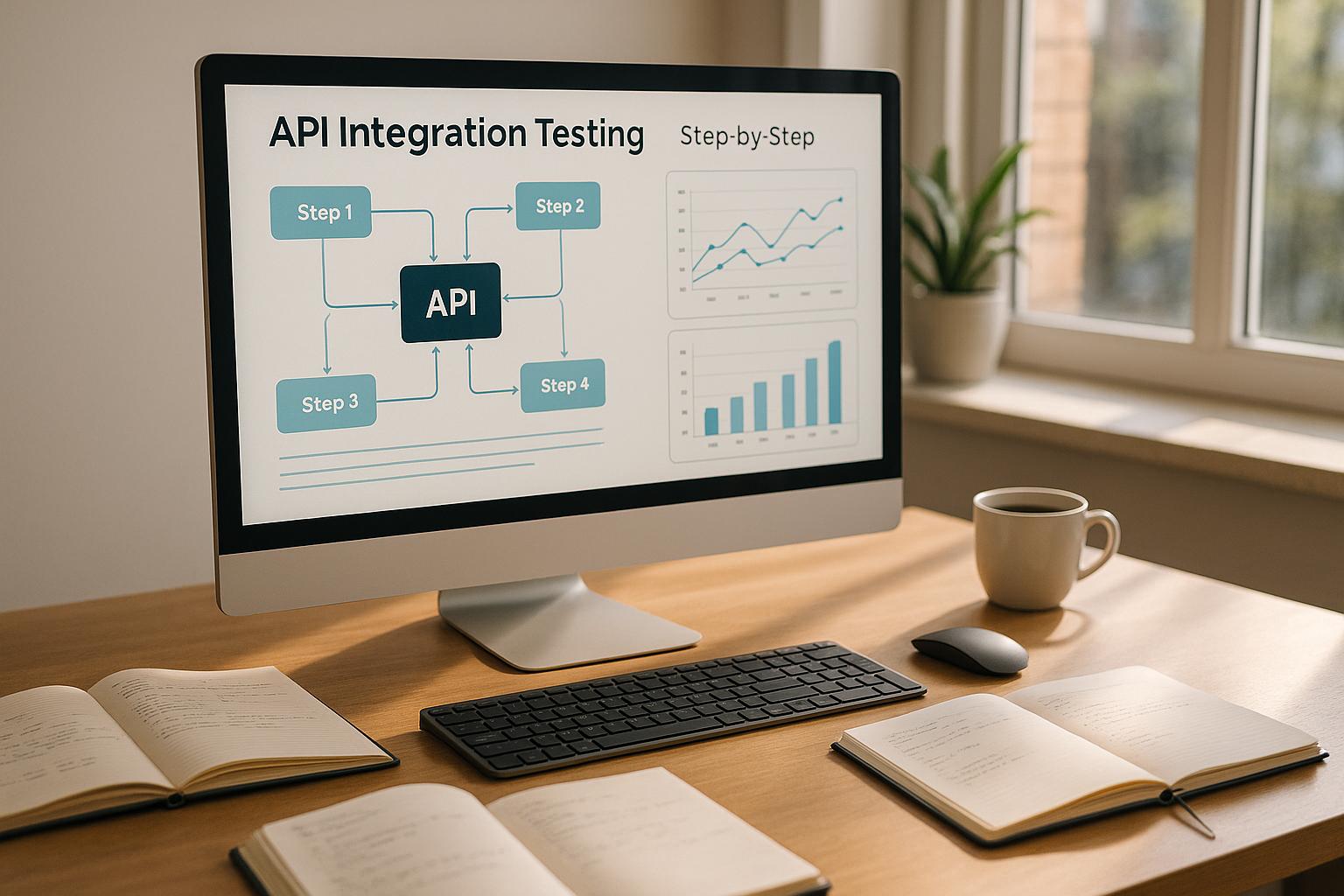

API Integration Testing: Step-by-Step Guide

API integration testing ensures that different software systems communicate effectively, handle data correctly, and perform reliably. It’s critical for avoiding errors like missed leads, corrupted data, or broken workflows. This guide explains how to test APIs, set up a secure testing environment, and validate results.

Key Points:

- What It Is: API integration testing checks how APIs work together, focusing on data exchange and performance under various conditions.

- Why It Matters: Poor integrations can lead to data loss, process failures, or missed business opportunities.

- Scenarios to Test: Form submissions, CRM sync, lead management, customer service tools, and e-commerce workflows.

- Setup: Use test databases, mock servers, and API monitoring tools. Ensure environment isolation and data security.

- Execution: Test authentication, automate processes, simulate errors, and stress-test for high traffic.

- Validation: Check status codes, response times, and data accuracy. Use automated tools to monitor and analyze results.

- Maintenance: Update test scripts, document failures, and monitor APIs continuously to catch issues early.

Example:

A UK software company reduced lead response time to 15 minutes using well-tested API integrations, increasing conversions by 27% in one quarter. This success highlights the importance of thorough testing for business growth.

API integration testing is a must for reliable, efficient systems that handle real-world demands. Follow these steps to ensure your APIs perform as expected.

Setting Up the Test Environment

A solid test environment is like a safety net - it mimics your production setup, safeguards sensitive data, and helps catch integration issues before they ripple out to real users or disrupt business operations. It's all about creating a controlled space where you can confidently test without unintended consequences.

Test Environment Components

To build a reliable test environment, you'll need a few essential pieces. At the core are separate test databases. These should be filled with realistic, sanitized data that mirrors production scenarios while ensuring sensitive information stays protected.

Next, your API endpoints should reflect production settings but route to test servers. If your integrations rely on third-party services, set up their staging or sandbox versions - many providers offer these specifically for testing.

When third-party services lack adequate testing options, mock servers step in. They simulate external API responses, allowing you to test errors and edge cases. For instance, tools like Postman let you simulate response codes, delays, and data structures.
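
A mock can be as small as a lookup from request path to canned response. The sketch below is one possible shape, not Postman's mock server feature: the paths, payloads, and the `mockResponse` and `handleMockRequest` names are hypothetical, and the handler only assumes the standard Node.js `(req, res)` signature.

```javascript
// Hypothetical canned responses for an external enrichment API.
// Each entry sets a status code, an artificial delay, and a JSON body.
const CANNED = new Map([
  ["/enrich/ok", { status: 200, delayMs: 0, body: { company: "Example Ltd" } }],
  ["/enrich/slow", { status: 200, delayMs: 3000, body: { company: "Example Ltd" } }],
  ["/enrich/rate-limited", { status: 429, delayMs: 0, body: { error: "rate_limited" } }],
  ["/enrich/down", { status: 503, delayMs: 0, body: { error: "unavailable" } }],
]);

// Pure lookup, so the mapping can be checked without opening a socket.
// Unknown paths fall back to a 404.
function mockResponse(path) {
  return CANNED.get(path) ?? { status: 404, delayMs: 0, body: { error: "not_found" } };
}

// Request handler with the standard Node.js (req, res) signature.
// The delay is applied before the response is written.
function handleMockRequest(req, res) {
  const { status, delayMs, body } = mockResponse(req.url);
  setTimeout(() => {
    res.writeHead(status, { "Content-Type": "application/json" });
    res.end(JSON.stringify(body));
  }, delayMs);
}
```

Passing `handleMockRequest` to Node's `http.createServer` and listening on a local port gives the code under test a real URL to call, so timeouts and error handling can be exercised without touching the third-party service.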

To track performance during testing, use API monitoring tools. Platforms like Datadog can provide detailed insights into response times, error rates, and system behavior under varying loads.

Consistency is key during testing cycles. A study by Testsigma found that teams defining test scenarios in advance could cut down testing time by up to 60%. Tools like Docker help maintain uniform configurations across team members, ensuring predictable test results.

| Tool | Primary Use Case | Key Features |

|---|---|---|

| Postman | API Testing | Collections, Environment Management, Automation |

| StepCI | CI/CD Integration | Command-line Runner, Multi-protocol support |

| Playwright | Comprehensive Testing | State Management, Mocking, Flexibility |

Environment Isolation and Security

Keeping your test environment completely separate from production is non-negotiable. Environment isolation starts with using entirely different servers, databases, and networks to ensure no accidental overlap with production systems.

For data security, multiple layers of protection are essential. Use techniques like data masking and synthetic data generation to protect sensitive information. If you need to test with production-like data, ensure it's anonymized to safeguard personally identifiable information (PII), payment details, and proprietary data.
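
As a rough illustration of data masking, the sketch below sanitizes a copy of a record before it is loaded into a test database. The field names (`email`, `phone`, `cardNumber`) and the masking rules are assumptions chosen for the example; real masking policies depend on your data and compliance requirements.

```javascript
// Masks the local part of an email but keeps the domain, so rules that
// depend on the domain still behave as they would with real data.
function maskEmail(email) {
  const at = email.lastIndexOf("@");
  if (at < 1) return "***";
  return `${email[0]}***${email.slice(at)}`;
}

// Keeps only the last two digits of a phone number.
function maskPhone(phone) {
  const digits = phone.replace(/\D/g, "");
  if (digits.length <= 2) return "**";
  return "*".repeat(digits.length - 2) + digits.slice(-2);
}

// Returns a sanitized copy of the record. Payment details are dropped
// entirely instead of being masked. Field names are hypothetical.
function maskRecord(record) {
  const { cardNumber, ...rest } = record;
  return {
    ...rest,
    email: maskEmail(record.email),
    phone: maskPhone(record.phone),
  };
}
```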

Restrict access with least privilege controls, such as RBAC (Role-Based Access Control), and store sensitive credentials like API keys and tokens in secrets management tools instead of hardcoding them into scripts.
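
One simple way to keep credentials out of scripts is to read them from the environment at run time and fail fast when they are missing. This is a minimal sketch; `TEST_CRM_API_KEY` is a hypothetical variable name, and a secrets manager would typically be what populates it.

```javascript
// Reads a credential from an environment-like object instead of source code.
// TEST_CRM_API_KEY is a hypothetical variable name.
function loadApiKey(env, name = "TEST_CRM_API_KEY") {
  const value = env[name];
  if (!value) {
    throw new Error(`Missing required secret: ${name}`);
  }
  return value;
}

// Builds the Authorization header from the loaded key.
function authHeaders(env) {
  return { Authorization: `Bearer ${loadApiKey(env)}` };
}
```

In a test script this would be called as `authHeaders(process.env)`, so the same code runs unchanged on a laptop and in CI.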

To maintain stability, implement rate limiting and API throttling. These controls help you test how your system handles API limits imposed by third-party services while preventing abuse. Regular security assessments, including penetration testing and code reviews, can uncover vulnerabilities before they become bigger problems. Tools like IDS and IPS add another layer of protection against unauthorized access.

| Data Management Strategy | Implementation Approach | Key Benefit |

|---|---|---|

| Static Test Data | Use fixed datasets with known values | Predictable and consistent results |

| Data Validation | Automate checks before and after operations | Catch errors early |

| Environment Isolation | Use separate data stores for each environment | Avoids data conflicts between tests |

With these safeguards in place, you can confidently verify that each integration, from databases to webhooks, functions as intended.

Testing with Reform Integrations

For Reform integrations, replicate your entire form workflow from submission to destination. This means creating test forms that mirror your production setup, including multi-step forms, conditional routing, and all live environment integrations.

CRM integration testing is a critical step. Use sandbox or staging versions of your CRM system. For example, HubSpot offers a sandbox environment where you can test lead creation, contact updates, and deal progression without risking real customer data. Similarly, ensure form submissions populate custom fields and trigger workflows correctly in systems like Salesforce.

Pay special attention to lead enrichment workflows. Test scenarios to confirm that services like email validation, company data lookup, and lead scoring algorithms function properly. Known test email addresses and company domains are helpful here for consistent results.

For webhook testing, point endpoints to staging systems or dedicated test URLs. This ensures form submissions trigger downstream processes without impacting production workflows. Test both successful and failed webhook deliveries to confirm robust error handling.

When it comes to analytics and tracking, use separate tracking codes and measurement IDs in the test environment. This prevents test data from contaminating production analytics while still enabling you to verify conversion tracking and event logging.

To manage integrations effectively, consider using feature flags. These allow you to enable or disable specific integrations in different environments, making it easier to test new features without disrupting existing workflows. Plus, they simplify rollbacks if something goes wrong.
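
A feature flag check can be a plain lookup keyed by environment. The flag names and environments below are hypothetical, and the sketch defaults to disabled when a flag or environment is unknown, which is one reasonable choice among several.

```javascript
// Hypothetical per-environment flags; names are illustrative only.
const FLAGS = {
  test: { crmSync: true, leadEnrichment: true, analytics: false },
  production: { crmSync: true, leadEnrichment: false, analytics: true },
};

// Unknown environments or integrations default to disabled (fail closed).
function isEnabled(flags, environment, integration) {
  return flags[environment]?.[integration] === true;
}

// Lists the integrations a submission should fan out to in an environment.
function activeIntegrations(flags, environment) {
  return Object.keys(flags[environment] ?? {}).filter((name) =>
    isEnabled(flags, environment, name)
  );
}
```

Rolling back an integration then means flipping one value instead of redeploying the form workflow.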

Finally, maintain data consistency across all connected systems. Use predefined datasets that remain stable throughout testing cycles. This approach makes it easier to identify whether unexpected behavior stems from integration changes or normal system variations.

Planning and Defining Test Cases

Once your test environment is ready, the next step is to focus on planning and organizing test cases. A clear, structured approach is crucial to ensure no critical failure points slip through the cracks.

Finding Critical Integration Points

Start by mapping out your core user journeys - these are the key paths users take through your system, often involving multiple APIs. For example, in Reform workflows, you’d trace the entire process from form submission to the final data destination.

Pay close attention to data handoffs between systems, as these are common trouble spots. Take a multi-step form submission: the data flows through validation, lead enrichment services, CRM synchronization, and analytics tracking. Each of these touchpoints introduces a potential failure point that must be tested thoroughly.

Authentication flows are another critical area, as they underpin all subsequent API calls. Test token generation, refresh cycles, and permission validation. A single failure here can disrupt the entire integration chain.

Think about the volume and frequency of each integration point. High-traffic endpoints, like form submissions or real-time validation APIs, require more rigorous testing than occasional batch processes. Considering that companies use an average of 40 different applications, prioritizing based on actual usage patterns is essential.

Third-party dependencies - such as payment processors, email services, and CRM systems - pose unique challenges because they operate outside your control. Test how your system handles their various response scenarios, including timeouts, rate limits, and service outages.

In Reform workflows, conditional routing logic also demands attention. When forms branch based on user responses, each path creates a unique integration scenario. Test every possible route to ensure data reaches the correct destination in the proper format.
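
To avoid missing a branch, the set of routes can be generated instead of listed by hand. The sketch below builds every combination of routing-relevant answers; the question fields and answer values are hypothetical.

```javascript
// Each question lists the answers that affect routing. Names are hypothetical.
const QUESTIONS = [
  { field: "companySize", answers: ["1-10", "11-200", "200+"] },
  { field: "interest", answers: ["demo", "pricing"] },
];

// Cartesian product of all answers: one object per distinct path.
function enumeratePaths(questions) {
  return questions.reduce(
    (paths, { field, answers }) =>
      paths.flatMap((path) => answers.map((answer) => ({ ...path, [field]: answer }))),
    [{}]
  );
}
```

Each generated object can be submitted as a test form response and checked against the destination it should reach. A full product over-counts when a later question only appears on some branches, so prune combinations the form cannot actually produce.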

Once you've identified these critical points, prioritize testing scenarios based on their potential risks and impact on your business.

Prioritizing Test Scenarios

Risk-based testing helps you focus your efforts where they matter most. Scenarios with a high likelihood of failure and significant business impact should take center stage.

Start by analyzing potential risks using historical data, defect reports, and input from stakeholders. For instance, if past issues have stemmed from payment gateway integrations, make them a top priority this time around.

Assess the business impact of each scenario. For example, a failed lead capture integration could result in major revenue losses, while a minor analytics discrepancy might have minimal consequences. Let these assessments guide your testing priorities.

Use a simple classification system - High, Medium, or Low priority - based on the likelihood and impact of each scenario. High-priority cases demand in-depth testing, covering multiple edge cases, while low-priority cases can be checked for basic functionality.
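
The classification can be made explicit with a small scoring function. The 1 to 3 scales and the thresholds below are assumptions for illustration; adjust them to match how your team weighs likelihood against impact.

```javascript
// Likelihood and impact are scored 1 (low) to 3 (high).
// The thresholds are an assumption; tune them to your own risk appetite.
function classifyPriority(likelihood, impact) {
  const score = likelihood * impact; // ranges from 1 to 9
  if (score >= 6) return "High";
  if (score >= 3) return "Medium";
  return "Low";
}

// Adds a priority to each scenario and sorts the riskiest first.
function rankScenarios(scenarios) {
  return scenarios
    .map((s) => ({ ...s, priority: classifyPriority(s.likelihood, s.impact) }))
    .sort((a, b) => b.likelihood * b.impact - a.likelihood * a.impact);
}
```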

Performance-critical integrations should also rank high. For example, real-time form validation APIs must respond within milliseconds to maintain a smooth user experience. Test these under various load conditions to ensure they meet performance standards.

Regularly reassess your priorities as your system evolves. New risks may emerge, while others become less relevant. Periodic reviews will help you adjust your testing focus to align with current needs.

Also, consider the difference between user-facing and backend integrations. Frontend integrations that directly impact user experience usually require higher priority than background processes like internal data synchronization.

Documenting Test Cases

Once you've prioritized your test scenarios, document them in detail to ensure consistent and repeatable testing. Each test case should focus on a specific integration point, outlining inputs, expected results, and validation steps.

Using standardized templates can help keep your documentation clear and organized, making it easier for team members to understand and maintain. Include key elements such as objectives, endpoints, request details, expected outcomes, and validation criteria.

Define test case objectives clearly. For example, instead of "test user creation", write something like "verify successful user creation with valid email and password generates a 201 status code and returns a user ID." Specific objectives make it easier to determine whether a test passes or fails.

Document precise request specifications, including the endpoint URL, HTTP method, required headers, and request body format. Be sure to include authentication details and any special parameters. This level of detail ensures consistent execution across different team members and environments.

| Test Case Component | Required Details | Example |

|---|---|---|

| Objective | Specific functionality being tested | Verify CRM contact creation from form submission |

| Endpoint | Full URL and HTTP method | POST /api/v1/contacts |

| Authentication | Required tokens or credentials | Bearer token in Authorization header |

| Expected Response | Status code and response structure | 201 Created with contact ID in response body |

Clearly outline expected outcomes, covering both success and failure scenarios. Specify exact HTTP status codes, response body structures, and any side effects, such as database updates or webhook triggers. This clarity helps quickly identify when something goes wrong.

Use assertions to automate validation. For instance: "Assert status code equals 201", "Assert response contains user_id field", or "Assert email field matches input value".
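
A documented test case translates naturally into data plus a checker. The sketch below follows the contact-creation example from the table above; the `id` field, the sample email, and the `checkResponse` helper are hypothetical.

```javascript
// A documented test case expressed as data. Values are illustrative.
const createContactCase = {
  objective: "Verify CRM contact creation from form submission",
  request: { method: "POST", path: "/api/v1/contacts", body: { email: "test@example.com" } },
  expected: { status: 201, requiredFields: ["id"], echoes: { email: "test@example.com" } },
};

// Returns a list of failed assertions; an empty list means the case passed.
function checkResponse(testCase, response) {
  const failures = [];
  const { status, requiredFields, echoes } = testCase.expected;
  if (response.status !== status) {
    failures.push(`status: expected ${status}, got ${response.status}`);
  }
  for (const field of requiredFields) {
    if (!(field in response.body)) failures.push(`missing field: ${field}`);
  }
  for (const [field, value] of Object.entries(echoes)) {
    if (response.body[field] !== value) failures.push(`${field} does not match input`);
  }
  return failures;
}
```

Collecting every failure, instead of stopping at the first, makes a failed run easier to diagnose.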

Don't overlook error scenarios. Test cases should cover invalid inputs, missing authentication, malformed requests, and system timeouts. These edge cases often reveal vulnerabilities that normal usage might miss.

Lastly, include maintenance notes to provide context and dependencies for each test case. Document any special setup requirements, known limitations, or how the test case relates to others. This helps maintain clarity and ensures smooth collaboration.

Running API Integration Tests

Once you've established a test environment and documented your cases, it's time to run your API integration tests. This involves using secure authentication, automation, and error simulation to evaluate how your APIs perform under real-world conditions.

Authentication and API Requests

Authentication is the cornerstone of secure API integration. As Staff Engineer Adrian Machado explains:

"Protecting your APIs starts with reliable authentication. As cyber threats grow more advanced, having secure authentication measures in place is no longer optional - it's a must".

Modern authentication methods like JWT (JSON Web Tokens) or OAuth 2.0 provide a solid foundation for security and flexibility, far surpassing basic authentication. For example, when testing Reform's CRM integrations, ensure proper token generation, refresh cycles, and usage patterns are functioning as expected.

To further strengthen security:

- Enforce HTTPS for all API communications.

- Use test API keys with the least privilege principle, granting only the permissions necessary for testing.

- Regularly rotate credentials, especially during prolonged testing, and verify system stability during these updates.

Test both successful and failed authentication flows. Monitor access attempts to identify potential security issues early. Also, consider testing the sequence of API calls - authentication, data validation, and submission - to confirm that a disruption at any step is handled as expected. Once authentication is verified, automation can help streamline the testing process.
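
Token refresh logic is easier to test when the expiry decision is a pure function. In this sketch, `fetchToken` stands in for whatever OAuth 2.0 or JWT endpoint your provider exposes, and the token shape (`accessToken`, `expiresAtMs`) and the 30-second skew are assumptions.

```javascript
// Decides whether a cached token should be refreshed before the next call.
// expiresAtMs is an absolute timestamp; skewMs refreshes slightly early so a
// token does not expire mid-request. Both names are assumptions.
function needsRefresh(token, nowMs, skewMs = 30000) {
  return !token || nowMs >= token.expiresAtMs - skewMs;
}

// Small cache around a caller-supplied fetchToken function, which is expected
// to resolve to { accessToken, expiresAtMs }. The clock is injectable so tests
// can simulate expiry without waiting.
function createTokenCache(fetchToken, now = Date.now) {
  let token = null;
  return async function getToken() {
    if (needsRefresh(token, now())) {
      token = await fetchToken();
    }
    return token.accessToken;
  };
}
```

With a fake clock, a test can advance time past the expiry and assert that `fetchToken` is called exactly once more.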

Using Automation Tools

Automation makes API tests repeatable and fast enough to run on every change. Tools like Postman (supporting REST and SOAP protocols) and Swagger (which generates test cases from API documentation) are excellent starting points. For performance testing, JMeter can simulate high-traffic scenarios, while frameworks like REST Assured and SoapUI handle more complex testing needs.

Integrating automated tests into your CI/CD pipeline ensures they run with every code change, catching issues early. Modular and independent test structures improve maintainability and prevent cascading failures. Use API mocking tools to simulate external services - this is particularly helpful for scenarios like Reform's lead enrichment integrations, where external responses can be mocked to test various conditions without relying on third-party APIs.

To enhance efficiency:

- Parameterize tests: Run the same logic with different input data, which is especially useful for testing multiple form submissions.

- Run tests in parallel: This significantly reduces execution times.

- Keep test scripts updated to reflect API changes.

- Implement robust error handling and detailed logging to simplify debugging.
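
Parameterized tests, the first item above, can be sketched as one check run over a table of inputs. The `isValidSubmission` validator and the sample cases are hypothetical stand-ins for whatever logic your form submissions go through.

```javascript
// Runs one check against many inputs and collects a result per case, so a
// single failure or exception does not stop the remaining cases.
function runParameterized(cases, check) {
  return cases.map(({ name, input, expected }) => {
    try {
      const actual = check(input);
      return { name, passed: actual === expected, actual };
    } catch (error) {
      return { name, passed: false, error: error.message };
    }
  });
}

// Hypothetical function under test: accepts a submission only when it has a
// plausible email address.
function isValidSubmission(submission) {
  return (
    typeof submission.email === "string" &&
    /^[^@\s]+@[^@\s]+\.[^@\s]+$/.test(submission.email)
  );
}

const submissionCases = [
  { name: "valid email", input: { email: "lead@example.com" }, expected: true },
  { name: "missing email", input: {}, expected: false },
  { name: "malformed email", input: { email: "lead@" }, expected: false },
];
```

Adding coverage then means adding a row to `submissionCases`, not writing another test function.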

Once automation is in place, it's time to challenge your API with error and stress tests.

Testing Error Scenarios and High Traffic

APIs face challenges that extend beyond normal operations. Testing error scenarios and high-traffic conditions ensures your integrations remain stable, even under stress.

Simulate negative scenarios such as:

- Invalid inputs or missing fields.

- Oversized payloads or malformed JSON.

- Unauthorized access attempts.

For example, test how the API handles empty fields, data that exceeds expected limits, or corrupted data formats. Push the system further by sending bursts of requests to evaluate rate limits and throttling policies, ensuring the API responds with appropriate error messages when limits are breached.
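
Negative payloads can be derived mechanically from one known-good payload. The field names and the 1024-byte limit below are assumptions; substitute the limits your API documents.

```javascript
// Derives negative test payloads from one known-good payload.
// Field names and the size limit are hypothetical.
function buildNegativeCases(validPayload, requiredFields, maxBytes = 1024) {
  const cases = [];

  // One case per required field, with that field removed.
  for (const field of requiredFields) {
    const { [field]: _removed, ...rest } = validPayload;
    cases.push({ name: `missing ${field}`, body: JSON.stringify(rest) });
  }

  // Same fields, but blank values.
  const blank = Object.fromEntries(Object.keys(validPayload).map((key) => [key, ""]));
  cases.push({ name: "empty fields", body: JSON.stringify(blank) });

  // A payload guaranteed to exceed the limit.
  cases.push({
    name: "oversized payload",
    body: JSON.stringify({ ...validPayload, notes: "x".repeat(maxBytes + 1) }),
  });

  // Truncated JSON that a parser must reject.
  cases.push({ name: "malformed JSON", body: JSON.stringify(validPayload).slice(0, -1) });

  return cases;
}
```

Each case body is sent as-is, and the test asserts that the API answers with a client error and a clear message instead of a server error.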

Prince Onyeanuna highlights the importance of chaos testing:

"Chaos testing is a technique that deliberately introduces failures into your API to see how well it recovers. Instead of assuming everything will work perfectly, you simulate real-world issues - like network disruptions, high latency, or harmful data - to ensure your API can handle them. The goal isn't just to break things but to make your API more resilient".

Introduce controlled disruptions, like network delays or database outages, to observe how your API reacts. Test scenarios where response times exceed acceptable thresholds and ensure the API provides clear and actionable error messages. Simulate user loads to mimic multiple simultaneous users, monitoring key metrics such as response times and error rates to identify bottlenecks.

Start with low-impact tests, such as minor network delays or isolated failed requests, and gradually increase complexity by combining multiple failure scenarios. Use logging and monitoring tools to track performance, error rates, and recovery mechanisms throughout testing. Implement strategies like exponential backoff for retries, circuit breakers to prevent cascading failures, and clear error messages for users.
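
Exponential backoff and a circuit breaker can both be expressed in a few lines. This is a simplified sketch with assumed defaults (200 ms base delay, three failures to open, five-second cooldown) and no jitter, so that its behavior is deterministic in tests.

```javascript
// Exponential backoff: the delay doubles per attempt and is capped at maxMs.
// Production code usually adds random jitter; it is left out here so the
// result is deterministic.
function backoffDelay(attempt, baseMs = 200, maxMs = 10000) {
  return Math.min(maxMs, baseMs * 2 ** attempt);
}

// Minimal circuit breaker. After `threshold` consecutive failures it opens
// and blocks calls until `cooldownMs` has passed. Parameter names are
// assumptions; the clock is injectable for testing.
class CircuitBreaker {
  constructor({ threshold = 3, cooldownMs = 5000, now = Date.now } = {}) {
    this.threshold = threshold;
    this.cooldownMs = cooldownMs;
    this.now = now;
    this.failures = 0;
    this.openedAt = null;
  }

  // True when a call may proceed.
  canRequest() {
    if (this.openedAt === null) return true;
    return this.now() - this.openedAt >= this.cooldownMs;
  }

  // A success closes the breaker and resets the failure count.
  recordSuccess() {
    this.failures = 0;
    this.openedAt = null;
  }

  // A failure at or past the threshold (re)opens the breaker.
  recordFailure() {
    this.failures += 1;
    if (this.failures >= this.threshold) this.openedAt = this.now();
  }
}
```

A caller checks `canRequest()` before each attempt, waits `backoffDelay(attempt)` between retries, and reports the outcome with `recordSuccess()` or `recordFailure()`.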

Finally, test how your API handles slow responses, temporary connection losses, and rate limits. For integrations like Reform, ensure temporary outages don't lead to permanent data loss by validating that the system appropriately backs off and retries without overwhelming external services. Automating these scenarios within your CI/CD pipeline ensures continuous validation of your API's resilience.

Validating and Monitoring Results

Once testing is complete, the next step is to validate the results and monitor system health. This process transforms raw data into actionable insights, ensuring your APIs function as expected and perform reliably. The validation techniques outlined below work hand-in-hand with automated monitoring tools, which are discussed later.

Response Validation Methods

Response validation ensures your API behaves as intended, delivering accurate data in the correct format. Here are three key methods to validate API responses:

- Status Code Validation: This checks whether the API returns the expected HTTP status codes. For example, in Postman, you can use the following script:

      pm.test("Status code is 200", function () {
          pm.response.to.have.status(200);
      });

- Response Time Validation: This measures how quickly the API responds. While acceptable response times depend on the use case, many users expect responses within 200–500 milliseconds. Here's an example:

      pm.test("Response time is less than 200ms", function () {
          pm.expect(pm.response.responseTime).to.be.below(200);
      });

- Data Validation: This focuses on the actual content returned by the API, ensuring it meets expected formats and includes accurate values. For instance, if you're using Reform's lead enrichment features, you might validate that enriched contact data includes a properly formatted email address, a valid phone number, and complete company details.

| Validation Method | Description |

|---|---|

| Status Code Validation | Verifies the API returns the correct HTTP status |

| Response Time Validation | Measures the speed of API responses |

| Data Validation | Ensures data accuracy and proper formatting |

To dig deeper into data validation, use JSON validators or custom scripts to confirm that the response structure aligns with expectations. Test both successful and error scenarios. For example, if a form submission fails due to missing required fields, the response should clearly indicate which fields need correction.
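
A custom validation script might look like the sketch below, which checks an enriched contact against simple format rules. The field names and patterns are assumptions for illustration, not Reform's actual response schema, and the patterns are deliberately loose.

```javascript
// Format rules for an enriched contact. Field names and patterns are
// assumptions, not an actual response schema.
const RULES = {
  email: (v) => typeof v === "string" && /^[^@\s]+@[^@\s]+\.[^@\s]+$/.test(v),
  phone: (v) => typeof v === "string" && /^\+?[0-9][0-9\s-]{6,18}$/.test(v),
  company: (v) =>
    v !== null && typeof v === "object" && typeof v.name === "string" && v.name.length > 0,
};

// Returns the names of fields that are missing or badly formatted.
function invalidFields(contact, rules = RULES) {
  return Object.entries(rules)
    .filter(([field, isValid]) => !isValid(contact[field]))
    .map(([field]) => field);
}
```

Returning the list of offending fields, not just a boolean, mirrors what a good API error response should do for the caller.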

These validation techniques tie back to earlier testing steps, creating a feedback loop that strengthens system reliability.

Automating Result Analysis

As your test suite grows, manual analysis becomes inefficient. Automation steps in to streamline the process, making it faster and more consistent.

Modern API monitoring tools offer real-time analytics, synthetic testing, distributed tracing, and automated alerts. These features not only help identify issues early but also improve overall performance and user experience. With APIs handling 83% of web traffic, automated result analysis is more critical than ever.

When choosing monitoring tools, prioritize those that integrate seamlessly into your workflow. For instance:

- Postman: Supports collection-based monitoring for individual requests or entire workflows.

- SigNoz: Provides open-source, full-stack application performance monitoring (APM).

Automated scripts can analyze results by comparing responses to expected outcomes and flagging anomalies. Setting up alerts that notify your team through communication tools ensures that issues are addressed promptly - often before users even notice.
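
Comparing a response with its expected outcome can be done with a short recursive diff. The helper name `diffResponse` is hypothetical, and this version ignores extra fields in the actual response, which is a design choice that suits APIs that add fields over time.

```javascript
// Recursively compares an expected response body with the actual one and
// returns the dotted paths whose values differ. Extra fields in `actual`
// are ignored.
function diffResponse(expected, actual, prefix = "") {
  const mismatches = [];
  for (const [key, want] of Object.entries(expected)) {
    const path = prefix ? `${prefix}.${key}` : key;
    const got = actual == null ? undefined : actual[key];
    if (want !== null && typeof want === "object") {
      mismatches.push(...diffResponse(want, got, path));
    } else if (got !== want) {
      mismatches.push(path);
    }
  }
  return mismatches;
}
```

A non-empty result is what an automated run would attach to the alert, so the team sees which fields drifted without opening the raw response.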

Synthetic monitoring simulates user interactions, while real user monitoring (RUM) captures actual user experiences. Together, they provide a complete picture of API performance. For Reform integrations, this could mean tracking how quickly CRM data syncs or how reliably email validation services respond under heavy traffic.

Incorporating automated analysis into your CI/CD pipeline helps catch performance regressions early. This ensures new code changes don’t disrupt existing integrations or degrade performance, feeding directly into your continuous monitoring efforts.

Continuous Monitoring and Metrics

The work doesn’t stop once your APIs go live. Continuous monitoring ensures long-term reliability by tracking performance trends and catching issues before they escalate.

Set clear monitoring goals and focus on key metrics like response time, uptime, error rate, throughput, and request volume. Establish alert thresholds for these metrics to address potential problems early.
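
These metrics and thresholds can be computed from raw request samples. The sample shape (`durationMs`, `ok`) and the default limits (1% error rate, 500 ms at the 95th percentile) are placeholders chosen for this sketch.

```javascript
// Summarizes a window of request samples shaped like { durationMs, ok }.
function summarize(samples) {
  const durations = samples.map((s) => s.durationMs).sort((a, b) => a - b);
  const errors = samples.filter((s) => !s.ok).length;
  // Nearest-rank 95th percentile, using integer math to avoid rounding drift.
  const p95Index = Math.max(0, Math.ceil((durations.length * 95) / 100) - 1);
  return {
    requestCount: samples.length,
    errorRate: samples.length === 0 ? 0 : errors / samples.length,
    p95Ms: durations.length === 0 ? 0 : durations[p95Index],
  };
}

// Compares a summary with alert thresholds. The default limits are placeholders.
function breachedAlerts(summary, limits = { errorRate: 0.01, p95Ms: 500 }) {
  const alerts = [];
  if (summary.errorRate > limits.errorRate) alerts.push("error_rate");
  if (summary.p95Ms > limits.p95Ms) alerts.push("p95_latency");
  return alerts;
}
```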

Go beyond basic availability checks by validating functional uptime through CRUD operations (Create, Read, Update, Delete). For example, with Reform’s form integrations, you’ll want to confirm that new submissions are captured accurately, data retrieval is reliable, updates are processed correctly, and deletions function as intended.

Regularly review API logs, dashboards, and reports to spot patterns and identify areas for improvement.

Alerts can also be routed by customer priority. For instance, a SaaS platform spanning AWS (US-East), Azure (Europe-West), and Google Cloud Platform (Asia-Pacific-Southeast) might categorize customers into tiers based on importance. When a Tier 1 customer experiences an outage, the alerting system immediately notifies the dedicated support team, allowing them to prioritize and resolve the issue quickly.

Integrate monitoring into every stage of your CI/CD pipeline - not just production. This proactive approach helps catch issues earlier in development, where fixes are easier and less costly. It also ensures your team stays informed about system health without being overwhelmed by excessive alerts.

Best Practices and Troubleshooting

After diving into the nuts and bolts of test planning and execution, it’s time to focus on long-term success. API integration tests aren’t foolproof - failures are bound to happen. But the real game-changer is how quickly and effectively teams can identify, resolve, and learn from these hiccups. By honing troubleshooting skills and sticking to proven maintenance strategies, you can keep your test suite running smoothly and delivering value.

Debugging and Fixing Failures

When an API integration test fails, tackling the issue systematically can save both time and frustration. Begin with the obvious suspects before delving into more complex debugging.

One of the most common issues? Authentication failures. For example, a grocery company faced token refresh problems during peak traffic. The fix? Adjusting the token refresh cycle and adding retry logic to handle the load effectively.

Another frequent culprit is data format mismatches. A restaurant’s point-of-sale system ran into trouble when parameter mismatches caused API rejections. The solution was to implement a middleware layer to rewrite requests on the fly. Similarly, a pharmacy solved address format inconsistencies that were causing tracking update failures by enforcing a standardized format.

The key to resolving failures lies in identifying the root cause. Is the problem with your application, the network, or the API provider? Tools like API monitoring and logging are invaluable for tracing interactions and debugging errors. On the client side, validate user input to prevent errors and provide user-friendly error messages instead of cryptic technical ones.

For specific integrations, like Reform, this could mean ensuring form submission data is reaching your CRM as expected or verifying that email validation responses are timely and accurate.

Once you’ve addressed the failures, maintaining your test suite becomes the next priority to prevent similar problems from cropping up again.

Maintaining Test Scripts

Keeping your test scripts up to date is essential as APIs evolve over time. A well-maintained suite ensures reliability and reduces troubleshooting headaches.

Start by writing clear, modular, and independent test scripts. Each test should include all the necessary data and resources to run on its own, avoiding situations where one test’s results impact another. This approach simplifies debugging and prevents cascading issues.

Break tests into reusable components. Instead of creating separate tests for every scenario, build modular subtests for common actions like authentication, data validation, or error handling. Keyword-driven testing can help streamline this process by creating shared action sets.

Keep your test data separate from the scripts by storing it in external files or databases. This makes it easier to update test scenarios without touching the code. Parameterizing tests to handle different input data also expands your test coverage without duplicating effort.

When APIs change, update existing test cases instead of adding new ones. This avoids redundancy and keeps your suite lean. Regularly review and refactor your tests to remove outdated cases and improve efficiency.

Finally, use version control for your test scripts and integrate them into your CI/CD pipeline. This ensures rapid feedback and helps catch issues early in the development cycle.

While maintaining the scripts is crucial, documenting your work ensures the entire team benefits from lessons learned.

Documentation and Lessons Learned

Good documentation turns individual fixes into team-wide knowledge. Without it, valuable insights can be lost, and new team members may struggle to catch up.

Internal documentation is your team’s lifeline. It should explain not just how tests work but also why specific approaches were chosen. This context helps new developers get up to speed without wading through old code.

Keep a record of failure cases and their solutions. For instance, when a grocery business found that fresh produce orders weren’t appearing on drivers’ schedules due to missing customer phone numbers in the API payload, they documented the issue and the fix. This prevented the same problem from recurring and gave the team a reference for similar challenges.

Make testing an integral part of your deployment process and document it thoroughly. Running tests in a sandbox environment before going live can catch mismatches early. Clearly outline which tests need to pass at each stage and specify the steps to follow if something fails.

Finally, monitor API activity regularly and document patterns. Tracking response times, error rates, or unusual traffic can help you spot anomalies and establish what "normal" behavior looks like. Over time, this data becomes a valuable resource for troubleshooting and optimization.

Conclusion

API integration testing plays a critical role in ensuring that software operates smoothly and reliably. When executed effectively, it helps prevent system failures, maintains accurate data flow, and supports the growth of your applications.

Key Takeaways

API integration testing offers valuable insights into the health of your systems. Careful planning upfront can significantly reduce testing time and uncover issues early. For instance, organizations that define test scenarios in advance can cut testing time by up to 60%. This proactive approach ensures problems are addressed before they impact production.

Automation is another cornerstone of effective testing. As highlighted by the Keploy Team, automating repetitive tests not only saves time but also minimizes human error. It ensures consistent test coverage, which is essential for maintaining reliability.

The testing process itself is straightforward yet powerful: plan, execute, validate, and maintain. Each step builds upon the last, creating a strong safety net for your integrations. Testing error scenarios and boundary conditions is just as important as testing the "happy path." After all, real-world users often behave unpredictably, and your APIs need to handle these situations gracefully.

Security and performance testing are non-negotiable. Verifying authentication, authorization, and encryption, while also testing for load and stress, ensures your APIs can handle real-world demands. With over 80% of public web APIs being RESTful, adhering to established best practices is crucial for maintaining consistency and reliability.

These strategies serve as a roadmap for refining your testing approach.

Next Steps for Your Testing Strategy

To put these principles into action, start by integrating API tests into your CI/CD pipeline. This continuous validation approach helps teams catch bugs early when they are easier and cheaper to fix.

Prioritize critical integrations first. Customer-facing APIs often have the most significant impact on your business, making them a top priority for thorough testing.

Consider adopting contract testing to ensure compatibility between API versions, especially when dealing with third-party services that might update independently of your development cycle. This ensures smooth communication between systems as they evolve.
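
The core idea of a contract check can be shown with a flat field-to-type map. This format and the `findBreakingChanges` helper are assumptions made for the sketch; dedicated contract-testing tools use richer schemas and also verify requests.

```javascript
// A contract here is a flat map of field name to expected type, for example
// { id: "string", email: "string" }. Removed fields and changed types are
// reported as breaking; added fields are treated as non-breaking.
function findBreakingChanges(oldContract, newContract) {
  const problems = [];
  for (const [field, type] of Object.entries(oldContract)) {
    if (!(field in newContract)) {
      problems.push(`removed: ${field}`);
    } else if (newContract[field] !== type) {
      problems.push(`type changed: ${field} (${type} -> ${newContract[field]})`);
    }
  }
  return problems;
}
```

Running a check like this whenever a third-party provider publishes a new version flags incompatibilities before they reach an integration test.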

Real-time monitoring is also essential. Keep an eye on uptime, response times, and error rates to extend your testing efforts into production. As Adrian Machado, Staff Engineer at Zuplo, wisely points out:

"End-to-End (E2E) API testing ensures your APIs work seamlessly across entire workflows".

This monitoring acts as an early warning system, helping you address issues before they escalate.

FAQs

What are the biggest challenges in API integration testing, and how can they be resolved?

API integration testing can be tricky, with challenges such as managing dependencies, setting up testing environments, working with legacy systems, ensuring data consistency, and navigating poor documentation. On top of that, security risks and scalability concerns can make the process even more complex.

To tackle these hurdles, start with detailed planning and clear documentation. Implement strong error-handling mechanisms to quickly identify and resolve problems. Make sure your testing environments are properly configured and that dependencies are managed with care. Lastly, following security best practices will help ensure a smoother and safer testing process. By addressing these factors early, you can make your API testing more efficient and dependable.

How can automation tools improve the efficiency and accuracy of API integration testing?

Automation tools can significantly streamline API integration testing by taking over tedious manual processes, allowing for faster and more consistent test execution. These tools can easily blend into CI/CD pipelines, ensuring APIs are tested continuously throughout the development cycle. The result? Time saved and fewer chances for human error to creep in.

Some advanced tools, particularly those equipped with AI-driven features, can even take things a step further. They can automatically generate test cases, spot irregularities, and adapt to API changes. This not only boosts accuracy but also ensures even the most complex scenarios are thoroughly tested, enabling teams to deliver dependable and high-performing APIs with less effort.

How can I ensure API security and performance during integration testing?

To keep API security intact during integration testing, it's essential to implement strong authentication protocols like OAuth 2.0. Encrypting data in transit using TLS ensures sensitive information stays protected, while validating all inputs helps ward off vulnerabilities such as injection attacks. These measures work together to shield your API from unauthorized access and data breaches.

When it comes to performance, running load tests under both normal and peak usage conditions is crucial. This helps pinpoint bottlenecks and fine-tune API endpoints for smoother operation. Strategies like caching and optimizing how requests are handled can make a big difference in response times. Consistent testing and ongoing monitoring are critical for maintaining high levels of security and performance throughout your API's lifecycle.
