Manual Accessibility Testing for Forms

Manual accessibility testing for forms ensures usability for everyone, especially users with disabilities. While automated tools catch basic issues like missing alt text or low contrast, they miss nuanced problems like unclear error messages, confusing tab orders, or improper screen reader announcements.

Here’s what manual testing involves:

- Keyboard Navigation: Test forms without a mouse to ensure logical tab flow, visible focus indicators, and no "focus traps."

- Screen Reader Compatibility: Verify proper label announcements, error message clarity, and logical reading order with tools like NVDA, JAWS, or VoiceOver.

- Visual Clarity: Check color contrast (4.5:1 for text, 3:1 for large text), ensure usability at 200% zoom, and avoid relying on color alone for error cues.

- Error Messages: Confirm errors are tied to fields, announced by assistive technologies, and provide clear, actionable guidance.

Why it matters: Automated scans miss 50–80% of accessibility issues. For example, when the University of Missouri manually tested its student portal forms in 2022, it found missing focus indicators and unlabeled error messages that automated tools had not caught. After fixes, satisfaction among students with disabilities rose by 27%.

Tools to use: Screen readers (NVDA, JAWS, VoiceOver), browser extensions (WAVE, Axe), and color contrast checkers (WebAIM Contrast Checker). Combining these tools with manual techniques ensures forms meet WCAG 2.1 and Section 508 standards.

Key takeaway: Manual testing isn’t just about compliance - it improves usability for all, boosting user satisfaction and form completion rates.


Manual Testing Techniques for Form Accessibility

These methods are designed to uncover barriers that might prevent users from completing forms. Each technique focuses on a specific aspect of accessibility, from keyboard-only navigation to ensuring compatibility with screen readers. Let’s dive into the key techniques that can help make forms more accessible.

Testing Keyboard Navigation

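This method involves using just the keyboard to interact with the form - no mouse allowed. The idea is to ensure that users can move through the form elements seamlessly. Use the Tab key to navigate forward and Shift + Tab to move backward. Beyond Tab, check that Enter and Space activate buttons, Space toggles checkboxes, arrow keys move between radio buttons in a group and through dropdown options, and Escape closes any open menu or dialog.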

As you test, check that all interactive elements - like input fields, buttons, checkboxes, radio buttons, and dropdown menus - are accessible via the keyboard. The focus should follow a logical flow, typically from top to bottom and left to right, matching the visual layout of the form. It’s also crucial to confirm that each focused element has a clear visual indicator, such as a border or color change, with enough contrast to be easily noticeable.

Some common issues to look out for include missing focus indicators, elements that can’t be accessed using the keyboard, a tab sequence that feels out of order, and focus traps that prevent users from moving past certain elements. These challenges can be particularly frustrating for people who rely on keyboard navigation due to motor disabilities.
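Before tabbing through a form by hand, it can help to predict the order a browser is likely to follow. The sketch below is a rough approximation in Python using only the standard library: it reads an HTML fragment, lists the expected tab stops, and flags two of the issues above (positive tabindex values that override document order, and clickable elements that are not focusable). The class name, function name, and sample markup are all hypothetical, and the script ignores CSS, scripts, and focus traps, so it supplements the keyboard walkthrough rather than replacing it.

```python
from html.parser import HTMLParser

# Elements that are keyboard focusable without a tabindex attribute.
NATIVE_CONTROLS = {"button", "input", "select", "textarea"}


class TabStopCollector(HTMLParser):
    """Collects the elements a browser would include in the Tab sequence."""

    def __init__(self):
        super().__init__()
        self.stops = []      # (tabindex, document position, element name)
        self.warnings = []   # findings to confirm by hand
        self._position = 0

    def handle_starttag(self, tag, attrs):
        self._position += 1
        attr = dict(attrs)
        name = attr.get("id") or attr.get("name") or tag
        if "disabled" in attr or attr.get("type") == "hidden":
            return  # disabled and hidden controls never receive focus
        native = tag in NATIVE_CONTROLS or (tag == "a" and "href" in attr)
        try:
            tabindex = int(attr.get("tabindex", ""))
        except (TypeError, ValueError):
            tabindex = None  # attribute missing or not a number
        if tabindex is None:
            if native:
                self.stops.append((0, self._position, name))
            elif "onclick" in attr:
                self.warnings.append(
                    f"{name}: has a click handler but is not keyboard focusable"
                )
            return
        if tabindex < 0:
            return  # focusable by script only, skipped by the Tab key
        if tabindex > 0:
            self.warnings.append(
                f"{name}: positive tabindex={tabindex} overrides document order"
            )
        self.stops.append((tabindex, self._position, name))


def tab_order(html):
    """Return (element names in expected Tab order, warnings)."""
    collector = TabStopCollector()
    collector.feed(html)
    collector.close()
    # Positive tabindex values come first (ascending), then document order.
    positive = sorted(stop for stop in collector.stops if stop[0] > 0)
    natural = [stop for stop in collector.stops if stop[0] == 0]
    return [name for _, _, name in positive + natural], collector.warnings


# Hypothetical form fragment used only for illustration.
FORM = """
<form>
  <input id="first-name" type="text">
  <input id="email" type="email" tabindex="3">
  <div id="fancy-checkbox" onclick="toggle()">Subscribe</div>
  <input type="hidden" name="csrf">
  <button id="submit" type="submit">Send</button>
</form>
"""

order, warnings = tab_order(FORM)
print(order)  # ['email', 'first-name', 'submit']
for warning in warnings:
    print(warning)
```

Here the email field jumps ahead of the first-name field, and the custom checkbox never appears in the sequence at all, which are exactly the kinds of findings to confirm with a real keyboard pass.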

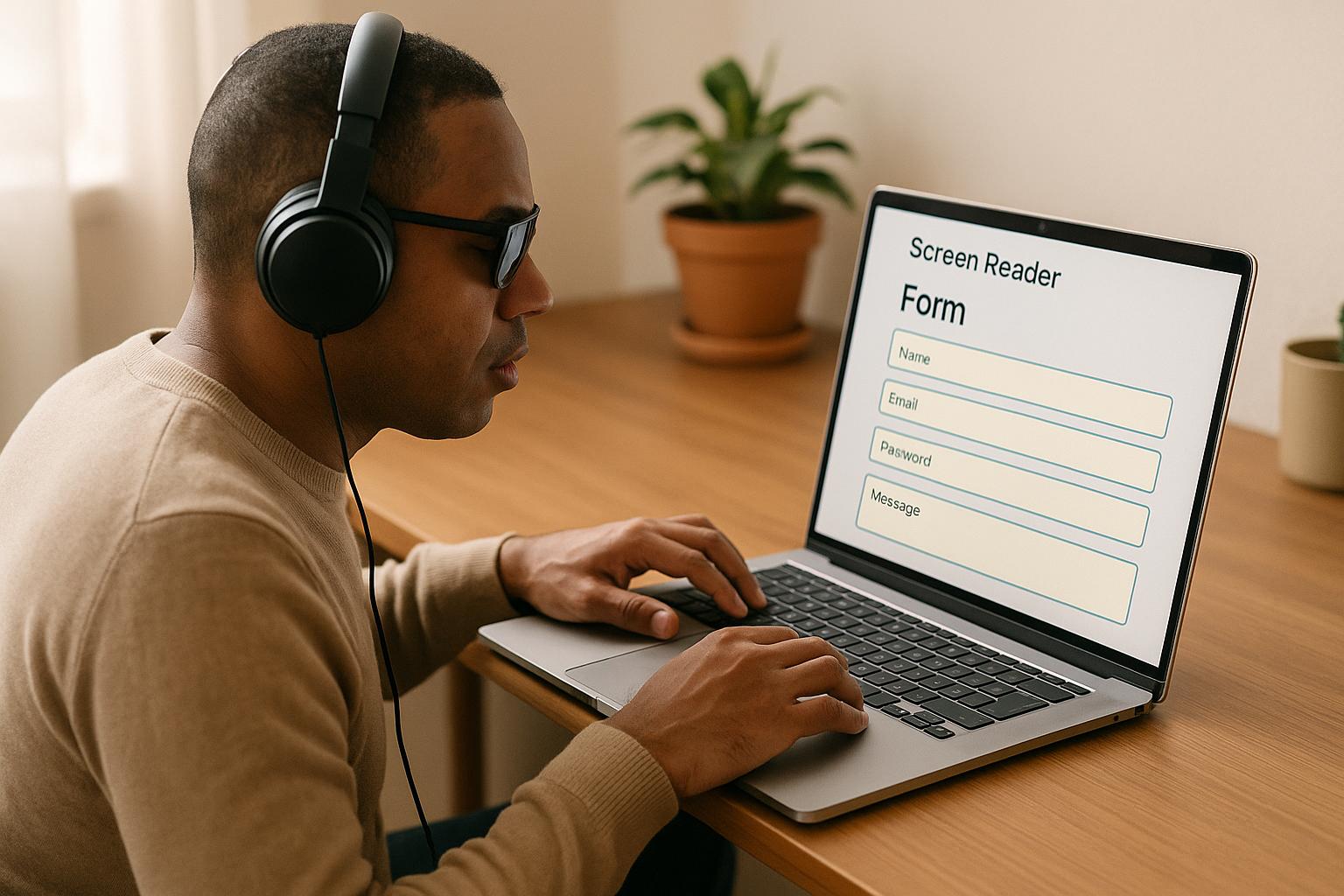

Testing with Screen Readers

Screen readers offer a way to experience the form as a visually impaired user. Popular screen readers include NVDA (free for Windows), JAWS (Windows), and VoiceOver (built into macOS and iOS).

When testing with a screen reader, ensure that every form field has a proper label that the screen reader can announce. For example, instead of a vague "edit text", it should say something like "email address, edit text" or "first name, required, edit text." This gives users the context they need to interact with the form effectively.
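A field that is announced only as "edit text" usually has no programmatic label. As a quick pre-check before the screen reader pass, the following sketch lists form controls that have none of the common naming mechanisms: a label with a matching for attribute, a wrapping label, aria-label, or aria-labelledby. It is a simplified illustration with hypothetical names and sample markup; it does not verify that an aria-labelledby target exists, and it cannot judge whether a label's wording is clear, which is what the listening test is for.

```python
from html.parser import HTMLParser

CONTROLS = {"input", "select", "textarea"}
# Input types that are not labelled like text fields; they are skipped here.
SKIPPED_TYPES = {"hidden", "submit", "reset", "button", "image"}


class LabelAudit(HTMLParser):
    """Records form controls and the labelling mechanisms found in the markup."""

    def __init__(self):
        super().__init__()
        self.label_targets = set()  # ids referenced by <label for="...">
        self.controls = []          # (name, id, already has a name)
        self._open_labels = 0       # depth of currently open <label> elements

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "label":
            self._open_labels += 1
            if attr.get("for"):
                self.label_targets.add(attr["for"])
        elif tag in CONTROLS and attr.get("type") not in SKIPPED_TYPES:
            named = bool(
                self._open_labels               # wrapped in a <label>
                or attr.get("aria-label")
                or attr.get("aria-labelledby")
            )
            name = attr.get("id") or attr.get("name") or tag
            self.controls.append((name, attr.get("id"), named))

    def handle_endtag(self, tag):
        if tag == "label" and self._open_labels:
            self._open_labels -= 1


def unlabelled_controls(html):
    """Return the names of controls with no detectable accessible name."""
    audit = LabelAudit()
    audit.feed(html)
    audit.close()
    return [
        name
        for name, control_id, named in audit.controls
        if not named and control_id not in audit.label_targets
    ]


# Hypothetical form fragment used only for illustration.
FORM = """
<label for="email">Email address</label>
<input id="email" type="email" required>
<label>First name <input name="first-name" type="text"></label>
<input id="phone" type="tel" placeholder="Phone">
<input id="search" type="search" aria-label="Search help articles">
"""

print(unlabelled_controls(FORM))  # ['phone']
```

The phone field relies on placeholder text alone, so it is the one most likely to be read without context and the first to check by ear.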

Check that instructions and error messages are announced at the right time. For instance, when a user focuses on a required field, the screen reader should indicate that it’s required. If there’s an error, the screen reader should announce it immediately when the user navigates to the problematic field. Also, make sure the reading order aligns with the visual layout of the form, and that dynamic updates - like error messages or success notifications - are conveyed instantly.

Checking Visual Elements and Color Contrast

This part of testing ensures that users with low vision or color blindness can interact with the form without difficulty. While automated tools for checking color contrast are helpful, they often fall short with complex backgrounds.

Manually test the contrast between text and its background for all form elements, including labels, input text, placeholder text, and error messages. The contrast ratio for normal text should be at least 4.5:1, while large text (18pt or 14pt bold) requires a minimum ratio of 3:1.
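To see where ratios like 4.5:1 come from, here is a minimal sketch of the WCAG 2.x contrast calculation in Python. The function names are illustrative, and the code assumes opaque six-digit hex colors on a solid background; gradients, background images, and transparency still need to be judged by eye.

```python
def _linear(channel):
    """Convert an 8-bit sRGB channel to linear light, per the WCAG 2.x definition."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance of a color given as '#rrggbb'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear(r) + 0.7152 * _linear(g) + 0.0722 * _linear(b)


def contrast_ratio(foreground, background):
    """Contrast ratio between two colors, from 1.0 (identical) up to 21.0."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground, background, large_text=False):
    """Check the 4.5:1 threshold for normal text or 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


print(f"{contrast_ratio('#000000', '#ffffff'):.2f}")  # 21.00
print(f"{contrast_ratio('#767676', '#ffffff'):.2f}")  # 4.54
print(passes_aa("#999999", "#ffffff"))                # False
```

The last line uses a light grey of the kind often chosen for placeholder text; on a white background it falls short of both thresholds.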

Avoid relying on color alone to communicate important information. For example, error messages shouldn’t depend solely on red text; they should include descriptive text as well. Similarly, required fields should be marked with explicit text like "required" rather than relying on visual cues like asterisks or color changes.

Also, check the form’s usability at 200% zoom. All text should remain legible, and no elements should overlap or disappear off-screen. Testing under different lighting conditions and across various devices is equally important, as visual appearances can vary significantly.

Testing Error Messages and Form Validation

Error messages play a critical role in helping users fix mistakes. This testing ensures that these messages are clear, descriptive, and accessible.

First, confirm that error messages are programmatically linked to their respective fields so they can be announced immediately by assistive technologies. Additionally, the messages should provide actionable guidance. For instance, instead of a vague "Invalid input", use something more specific like "Please enter a valid email address" or "Password must be at least 8 characters long." This added clarity is especially helpful for users with cognitive disabilities.
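One common way to link a message to its field is to mark the field with aria-invalid="true" and point its aria-describedby attribute at the element that holds the error text. The sketch below assumes that pattern and reports invalid fields whose message is missing or not connected. The names and sample markup are hypothetical, and the check only covers static HTML; whether the message is actually spoken, and when, still has to be confirmed with a screen reader.

```python
from html.parser import HTMLParser

# Elements without a closing tag; they never contain text.
VOID_TAGS = {"input", "br", "img", "hr", "meta", "link"}


class ErrorLinkAudit(HTMLParser):
    """Collects invalid fields and the text of every element that has an id."""

    def __init__(self):
        super().__init__()
        self.invalid_fields = []  # (field name, [ids from aria-describedby])
        self.text_by_id = {}      # element id -> text found inside it
        self._open = []           # stack of (tag, id) for open elements

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if attr.get("aria-invalid") == "true":
            name = attr.get("id") or attr.get("name") or tag
            refs = (attr.get("aria-describedby") or "").split()
            self.invalid_fields.append((name, refs))
        if tag not in VOID_TAGS:
            self._open.append((tag, attr.get("id")))
            if attr.get("id"):
                self.text_by_id.setdefault(attr["id"], "")

    def handle_endtag(self, tag):
        # Close the most recently opened element with this tag name.
        for i in range(len(self._open) - 1, -1, -1):
            if self._open[i][0] == tag:
                del self._open[i:]
                break

    def handle_data(self, data):
        for _, element_id in self._open:
            if element_id:
                self.text_by_id[element_id] += data


def unlinked_errors(html):
    """Return a finding for each invalid field without a connected message."""
    audit = ErrorLinkAudit()
    audit.feed(html)
    audit.close()
    problems = []
    for name, refs in audit.invalid_fields:
        if not refs:
            problems.append(f"{name}: marked invalid but has no aria-describedby")
        elif not any(audit.text_by_id.get(ref, "").strip() for ref in refs):
            problems.append(f"{name}: aria-describedby points to no message text")
    return problems


# Hypothetical form fragment used only for illustration.
FORM = """
<label for="email">Email address</label>
<input id="email" type="email" aria-invalid="true" aria-describedby="email-error">
<p id="email-error">Please enter a valid email address.</p>

<label for="password">Password</label>
<input id="password" type="password" aria-invalid="true">
<p class="error">Password must be at least 8 characters long.</p>

<label for="zip">ZIP code</label>
<input id="zip" type="text" aria-invalid="true" aria-describedby="zip-error">
"""

for problem in unlinked_errors(FORM):
    print(problem)
```

In this sample the email field is wired correctly, the password message is visible but not connected to its field, and the ZIP code field references a message that does not exist.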

Make sure error messages stay visible and prevent form submission until the issues are resolved. When a form is submitted successfully, the success message should be easy to spot and also conveyed audibly for users relying on assistive technology. This confirmation reassures users that their submission went through as intended.

Tools for Manual Accessibility Testing

Having the right tools can make manual accessibility testing much more effective. While automated scanners can catch some issues, they often miss the nuances that manual testing reveals. Using manual testing tools allows you to experience forms in the way users with disabilities do. These tools also provide insights into common accessibility problems and help ensure compliance with standards like WCAG and Section 508. Below, we’ll explore key tool categories that can support your manual testing efforts.

Screen Readers and Assistive Technology

Screen readers and assistive tools are essential for understanding how visually impaired users navigate forms. These tools convert on-screen content into speech or braille, offering a firsthand look at the user experience. Popular options include:

- NVDA: A free, open-source screen reader for Windows, often recommended for accessibility testing.

- JAWS: A widely used screen reader for Windows, though it requires a paid license.

- VoiceOver: Built into macOS and iOS, making it a convenient option for Apple users.

These tools help verify critical aspects of accessibility, such as whether labels are announced properly, required fields are identified, and error messages are communicated effectively. They also allow you to test keyboard-only navigation, ensuring users can interact with forms without a mouse.

Browser Extensions for Accessibility

Browser extensions are another valuable resource for manual testing. They provide quick visual feedback and help identify technical issues that might otherwise go unnoticed. Some popular options include:

- WAVE: Highlights issues like missing labels, low contrast, and improper tab order.

- Axe: A versatile tool that flags ARIA attribute problems and other accessibility concerns.

- Pa11y: Strictly a command-line tool rather than a browser extension; it loads pages in a headless browser, which makes it useful for scripted, repeatable scans alongside the extensions above.

For instance, a browser extension might highlight a missing label on a form field. You can then manually confirm whether the replacement label works as intended, ensuring it’s correctly announced by a screen reader. Keep in mind that automated tools often catch only 20–50% of accessibility issues. Use them as a starting point, not a complete solution, and pair them with tools for testing color contrast to cover more ground.

Color Contrast Testing Tools

Color contrast tools are critical for ensuring readability under various conditions. They allow you to test whether your form’s foreground and background colors meet WCAG standards. Popular tools include:

- Accessible Color Contrast Checker

- WebAIM Contrast Checker

These tools let you evaluate dynamic elements like hover states, focus indicators, and error messages. It’s important to test not only the main text but also placeholder text, disabled fields, and button states. Contrast issues can become more apparent under different lighting or on various devices, so thorough testing is key.

A Comprehensive Testing Approach

Combining screen readers, browser extensions, and color contrast tools provides a well-rounded manual testing strategy. For example, screen readers can uncover audio feedback issues, extensions can flag technical problems, and contrast tools ensure visual clarity. This approach addresses many of the barriers users might face, including the 25% of accessibility issues tied to keyboard support alone.

Platforms like Reform, which prioritize accessibility in their form-building features, can help reduce common problems from the start. This allows your manual testing to focus on refining details rather than fixing fundamental issues, ultimately creating a smoother experience for all users.


Form Accessibility Best Practices and Common Problems

Creating accessible forms requires tried-and-true methods while steering clear of common mistakes. By combining manual testing techniques with thoughtful design, you can ensure forms are usable by everyone.

Best Practices for Accessible Forms

Adhering to these practices can make forms more inclusive and effective:

- Use clear labels: Labels like "Email address" are much more helpful than vague prompts like "Enter here."

- Enable keyboard navigation: Ensure users can complete the form without needing a mouse.

- Provide visible focus indicators: Use distinct borders or noticeable color changes to show where the user is focused.

- Link error messages to fields: Use ARIA attributes so screen readers can announce errors clearly, and avoid generic language.

- Maintain proper color contrast: For readability, aim for a contrast ratio of at least 4.5:1 for regular text and 3:1 for larger text.

- Test with real users: Regularly involve people with disabilities to uncover usability issues that automated tools might miss.

Focus indicators are essential for keyboard users to track their position within a form. Default browser indicators might not be sufficient, so enhancing them with bold borders or contrasting colors can make a big difference.

Error handling is another critical area. When users encounter an issue, screen readers should announce both the field and the error message. Avoid generic messages like "Error" - be specific about what needs fixing.

Color contrast should be tested in various environments, such as bright sunlight or on different devices, to ensure readability remains consistent.

Finally, testing with users who have disabilities provides insights into real-world challenges. Combined with regular accessibility audits, this approach ensures forms are both compliant and user-friendly.

Even with these strategies, some common pitfalls can still pose barriers to accessibility.

Common Form Accessibility Problems

Here are some frequent issues that can hinder a form's usability:

- Missing or incorrect labels: Screen readers may only announce fields as "edit text" without proper labels, leaving users unsure of what input is required.

- Weak focus indicators: If focus indicators are too subtle or inconsistent, keyboard users can lose their place - especially in complex forms with multiple sections.

- Inaccessible error messages: Problems arise when error messages aren’t announced by screen readers, rely solely on color changes, or use vague text like "Error" without specifying the issue.

- Poor color contrast: Text that looks fine on a monitor might be unreadable on a mobile screen in sunlight or for users with visual impairments. This applies to placeholder text, disabled fields, and button states that use light colors.

- Unclear button and link text: Labels like "Submit" or "Click here" lack context. More descriptive text, such as "Submit contact form" or "Download accessibility guide", helps all users understand the purpose of each action.

The table below highlights how manual testing can uncover these problems, which often go unnoticed by automated tools:

| Common Problem | Manual Testing Approach | Why Automated Tools Miss It |
|---|---|---|
| Missing/inaccurate labels | Screen reader testing | Cannot assess label clarity or context |
| Poor keyboard navigation | Keyboard-only testing | Cannot check logical focus order |
| Unclear error messages | Screen reader and keyboard testing | Cannot verify announcement timing |
| Insufficient color contrast | Manual visual inspection | Struggles with gradients and images |

Tools like Reform integrate accessibility features to address these issues upfront. This allows manual testing to focus on enhancing the overall user experience rather than fixing basic problems.

Being aware of these challenges sets the stage for a systematic manual testing process that ensures your forms are accessible to all users.

Step-by-Step Manual Testing Process

A thorough manual testing process can uncover issues that automated tools might miss, ensuring a more complete and user-friendly experience. By combining automated scans with hands-on evaluations, you can create a comprehensive picture of accessibility.

Setup and Initial Automated Scan

Before starting manual testing, set up a clear testing environment. Identify the types of forms you'll be working on - whether it's a registration form, a contact form, a checkout process, or a multi-step application. Map out the primary user paths, focusing on how users typically navigate the form and the actions they need to take.

Kick things off with an automated scan using tools like WAVE or Axe. These tools are great for detecting basic issues such as missing labels, simple color contrast problems on solid backgrounds, and missing alt text. While automated tools are helpful for identifying these surface-level problems, they’re only part of the solution. For example, the WebAIM Million report found that 96.3% of home pages in 2023 had detectable WCAG 2 failures, with form field labeling and error identification being some of the most frequent issues. However, automated tools typically catch only 20–50% of accessibility problems, which highlights the importance of manual testing.

Manual Testing Steps

Manual testing involves a structured process that checks keyboard navigation, screen reader compatibility, and visual clarity.

Start with keyboard navigation testing. Use only the keyboard to navigate the form, ensuring that all interactive elements are accessible, the focus order makes sense, and focus indicators are clearly visible.

Next, conduct screen reader testing with tools like NVDA (Windows), VoiceOver (macOS), or JAWS. Navigate the form as a user would, listening to how each field, label, and instruction is announced. Pay close attention to error messages - they should be announced clearly and tied to the correct fields programmatically.

Finally, perform a visual assessment. Check for color contrast on gradients and images, ensure focus indicators are visible against different backgrounds, and confirm that field grouping and instructions are clear. Verify that error messages are easy to see and compatible with screen readers.

In 2022, the University of Missouri tested the accessibility of student portal forms using NVDA and keyboard-only navigation. They discovered missing focus indicators and unlabeled error messages - issues that automated tools didn’t catch. After fixing these problems, satisfaction scores among students with disabilities rose by 27% (Source: University of Missouri Digital Access, 2022).

Recording Results and Retesting

Documenting issues is a critical part of the process. For each problem, provide a clear description, steps to reproduce it, and screenshots or screen reader outputs when applicable. Use a standardized template or issue tracker to ensure consistency.

Once documented, prioritize the issues based on their impact and alignment with WCAG standards. High-priority issues, like missing labels or inaccessible error messages, should be addressed first. Link each issue to the relevant WCAG criteria, and include actionable recommendations for fixes.
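If you do not already have an issue tracker template, a small structured record is enough to keep findings consistent. The sketch below shows one possible shape in Python; the field names, the four-level severity scale, and the sample issues are assumptions for illustration, not a standard.

```python
from dataclasses import dataclass, field

# Assumed severity scale, most urgent first.
SEVERITY_ORDER = {"critical": 0, "serious": 1, "moderate": 2, "minor": 3}


@dataclass
class AccessibilityIssue:
    summary: str
    wcag_criterion: str   # e.g. "2.4.7 Focus Visible"
    severity: str         # one of the SEVERITY_ORDER keys
    recommendation: str
    steps_to_reproduce: list = field(default_factory=list)
    status: str = "open"  # "open", "fixed", or "verified" after retesting


def prioritize(issues):
    """Return issues sorted so the most severe come first."""
    return sorted(issues, key=lambda issue: SEVERITY_ORDER[issue.severity])


# Hypothetical findings from a manual test session.
issues = [
    AccessibilityIssue(
        summary="Focus outline is invisible on the Submit button",
        wcag_criterion="2.4.7 Focus Visible",
        severity="serious",
        recommendation="Add a high-contrast outline for the focused state",
        steps_to_reproduce=["Open the contact form", "Press Tab until Submit"],
    ),
    AccessibilityIssue(
        summary="Email field is announced only as 'edit text'",
        wcag_criterion="4.1.2 Name, Role, Value",
        severity="critical",
        recommendation="Associate a visible label with the input",
        steps_to_reproduce=["Start NVDA", "Tab to the email field"],
    ),
]

for issue in prioritize(issues):
    print(f"[{issue.severity}] {issue.wcag_criterion}: {issue.summary}")
```

Keeping the status field on the same record makes the later retest easy to log: change it to "fixed" when the patch ships and to "verified" once the same manual steps pass.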

After implementing fixes, retest the affected areas using the same manual steps to confirm the problems are resolved. This step is crucial, as fixes can sometimes introduce new issues. Update your documentation to reflect the current status of each problem, creating a clear record of improvements.

| Testing Phase | Key Activities | Documentation Requirements |
|---|---|---|
| Initial Setup | Identify forms, map user paths, run automated scans | Test plan, scope definition, baseline report |
| Manual Testing | Test keyboard navigation, screen readers, visuals | Issue descriptions, reproduction steps, screenshots |
| Results & Retesting | Prioritize issues, verify fixes, track progress | Prioritized issue list, retest results, status updates |

With all issues documented and resolved, the next step is to integrate accessibility practices into the design process.

How Reform Supports Accessible Form Design

Reform takes accessibility seriously, embedding it into every stage of form design. The platform offers templates with proper label associations, visible focus indicators that work seamlessly across different themes, and ARIA support for complex interactions like conditional logic and multi-step processes.

Reform also helps users create forms with accessible color contrast by providing real-time visual feedback during the design process. Logical tab order is managed automatically, and error messages are generated with proper programmatic associations, ensuring they’re screen reader–friendly.

For multi-step forms and conditional routing, Reform ensures that focus management and screen reader announcements work smoothly as the form dynamically updates. This proactive approach reduces the time needed for manual testing and helps businesses stay compliant with WCAG guidelines and U.S. accessibility laws like Section 508 and the ADA. By addressing fundamental accessibility needs during the design phase, manual testing can focus on refining the user experience rather than fixing basic issues.

Conclusion

Manual accessibility testing transforms forms into inclusive experiences, ensuring they work effectively for everyone. While automated tools can identify basic issues, they often miss more nuanced barriers - like whether error messages make sense to screen reader users or if focus indicators are visible against busy backgrounds. These subtleties demand human judgment and hands-on testing, as outlined earlier in this discussion.

The business case for accessible forms is hard to ignore. Consider this: 1 in 4 U.S. adults has a disability, making accessibility a priority for a sizable audience. In 2022, a leading U.S. bank saw a 15% increase in form submissions from visually impaired users after fixing focus and error messaging issues. These improvements came directly from the thorough manual testing methods described above.

Accessible forms go beyond compliance with WCAG 2.1 and Section 508 standards - they create smoother, more enjoyable experiences for everyone. This universal design philosophy has tangible benefits, including higher conversion rates. As Reform puts it, businesses can "Enjoy higher completion rates" by ensuring forms are "easy to navigate for all users, complying with accessibility standards to reach a wider audience". Achieving these benefits requires ongoing effort.

Accessibility testing isn’t a one-time task. As forms evolve - whether through design updates, new features, or platform changes - accessibility can slip without regular checks. A sustainable approach combines automated scans with manual techniques like keyboard navigation tests, screen reader evaluations, and visual reviews, ensuring forms remain inclusive over time.

FAQs

Why is manual accessibility testing important for forms, and how does it complement automated testing?

Manual accessibility testing plays a crucial role in making sure forms are easy to use and inclusive, especially for individuals with disabilities. While automated tools are great for spotting common issues, they often miss subtler problems - like vague instructions or difficulties with keyboard navigation - that only manual testing can uncover.

By prioritizing how forms work in practical, everyday scenarios, manual testing ensures they meet accessibility standards and offer a smooth experience for everyone. This not only helps you reach a wider audience but also shows a genuine dedication to inclusivity and meeting compliance requirements.

What challenges do people with disabilities face when using forms, and how can manual testing help improve accessibility?

People with disabilities often face hurdles when trying to use forms. These can include trouble navigating with a keyboard, confusion caused by unclear labels, or difficulty interacting with elements like buttons or dropdown menus that aren't designed with accessibility in mind. Such obstacles can turn a simple task into a frustrating or even impossible experience.

This is where manual accessibility testing becomes crucial. By carefully examining how well a form works with tools like screen readers and supports keyboard navigation, testers can pinpoint and address these barriers. The goal is to make forms easy to use, compliant with accessibility guidelines, and welcoming for everyone.

How can I make form error messages accessible for users who rely on screen readers?

To make your form error messages more accessible, focus on using clear and precise language that explains both the problem and how to resolve it. Position the error messages close to the relevant fields and link each one to its input with aria-describedby. For errors that appear dynamically, place the message in a live region (aria-live or role="alert") so screen readers announce it as soon as it is inserted.

It's also important to combine color with text to highlight errors. Relying solely on color can create barriers for users with visual impairments. To ensure everything works as intended, manually test your forms with screen readers. This step helps confirm that error messages are both understandable and properly announced.
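As a rough illustration of the markup this answer describes, the sketch below builds the HTML for a single text field in Python. The function name and the "-error" id suffix are hypothetical conventions. It also assumes the error paragraph is inserted into the page after validation runs, since role="alert" content that is already present at page load is not reliably announced.

```python
from html import escape


def render_text_field(field_id, label, error=None, required=False):
    """Return HTML for a labelled text input, with a linked error if given."""
    safe_id = escape(field_id)
    error_id = f"{safe_id}-error"
    attrs = [f'id="{safe_id}"', f'name="{safe_id}"', 'type="text"']
    if required:
        attrs.append("required")
    if error:
        # Mark the field invalid and point it at the message element.
        attrs.append('aria-invalid="true"')
        attrs.append(f'aria-describedby="{error_id}"')
    lines = [
        f'<label for="{safe_id}">{escape(label)}</label>',
        f'<input {" ".join(attrs)}>',
    ]
    if error:
        # The "Error:" prefix conveys the state in text, not by color alone.
        lines.append(f'<p id="{error_id}" role="alert">Error: {escape(error)}</p>')
    return "\n".join(lines)


print(render_text_field(
    "email",
    "Email address",
    error="Please enter a valid email address.",
    required=True,
))
```

Whatever generates the markup, the final check is the same: move focus to the field with a screen reader running and listen for both the label and the error text.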
