AI Data Privacy: Navigating GDPR and CCPA

AI systems process vast amounts of personal data, creating unique privacy challenges under laws like GDPR and CCPA. Here's what you need to know:

- GDPR (EU/UK): Requires opt-in consent, transparency in automated decisions, and allows individuals to object to AI-driven decisions. Fines can reach up to €20 million or 4% of global turnover.

- CCPA (California): Uses an opt-out model, mandates transparency about AI logic, and grants rights like data deletion and opting out of automated decision-making. Fines range from $2,500 to $7,500 per violation.

- AI Risks: Issues like algorithmic opacity, data repurposing, and data spillovers complicate compliance. For example, training AI on personal data can make deletion requests costly and technically complex.

- Key Differences: GDPR emphasizes safeguards like human oversight, while CCPA focuses on consumer rights like opting out of data sharing and automated decisions.

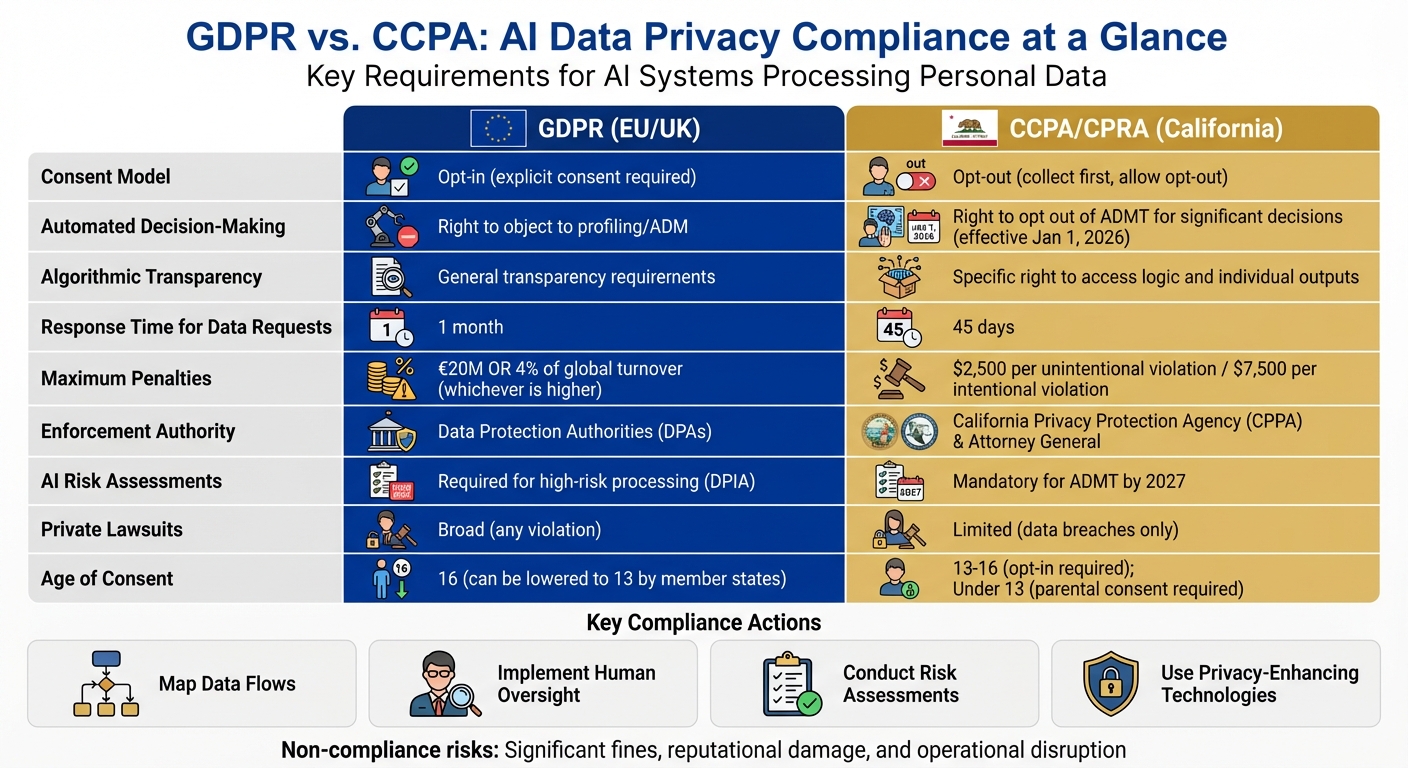

Quick Comparison:

| Feature | GDPR | CCPA/CPRA |

|---|---|---|

| Consent Model | Opt-in | Opt-out |

| Automated Decisions | Right to object | Right to opt out (2026 rule) |

| Penalties | €20M or 4% turnover | $2,500–$7,500 per violation |

| Data Requests Response | 1 month | 45 days |

| Risk Assessments | Required for high-risk AI | Mandatory for AI by 2027 |

To comply, businesses must map data flows, ensure human oversight, and use privacy-enhancing technologies like synthetic data or differential privacy. Ignoring these regulations can lead to steep fines and reputational damage.

GDPR vs CCPA AI Compliance Requirements Comparison

Key Privacy Laws Impacting AI (GDPR, CCPA) | Exclusive Lesson

GDPR vs. CCPA: Key Differences

Both GDPR and CCPA aim to protect personal data, but their approaches differ significantly - especially when it comes to AI applications across different regions.

User Rights and Consent Requirements

One major distinction lies in how consent is handled. GDPR follows an opt-in model, meaning businesses must obtain clear, informed, and specific consent before processing personal data. For AI, this means explicit user consent is required before using their data for training purposes. On the other hand, CCPA uses an opt-out model. Businesses can collect and process data without prior consent for users over 16, but they must provide a visible "Do Not Sell or Share My Personal Information" link on their websites. For users aged 13 to 16, opt-in consent is necessary, while children under 13 require parental approval.

The two regulations also differ in their approach to automated decision-making. Under GDPR’s Article 22, individuals can object to decisions made solely by automated processing. California’s new rules, effective January 1, 2026, allow consumers to opt out of Automated Decisionmaking Technology (ADMT) for significant decisions. However, businesses can bypass this opt-out requirement by offering a human review process to overturn automated decisions. Additionally, while GDPR requires transparency about general data processing, CCPA goes a step further. It mandates businesses to provide pre-use notices for ADMT systems and, upon request, explain the system’s logic and how it processes individual data. As Alston & Bird noted:

This new 'algorithmic transparency' standard makes it important for businesses to document sufficient technical information about the operation of the ADMT before first use.

Both frameworks grant users the right to request data deletion, but in AI contexts, this could mean retraining models to remove learned parameters tied to that data. These differences in consent and decision-making create distinct compliance challenges for businesses.

Legal Requirements and Penalties

The enforcement and penalty structures also vary. GDPR is enforced by national Data Protection Authorities (DPAs) across EU member states, while CCPA is overseen by the California Privacy Protection Agency (CPPA) and the California Attorney General. GDPR violations can lead to fines of up to €20 million or 4% of global turnover. Meanwhile, CCPA imposes fines of $2,500 for unintentional violations and $7,500 for intentional ones. Violations involving minors under 16 automatically incur the $7,500 fine. Litigation rights differ as well: GDPR allows individuals to sue for any violation, whereas under CCPA, consumer lawsuits are limited to data breaches involving unencrypted or nonredacted personal data, with statutory damages ranging from $100 to $750 per incident. Notably, CCPA’s 30-day cure period for violations is no longer in effect.

For AI-specific compliance, CCPA has stricter requirements. Businesses using AI for significant decisions must conduct annual cybersecurity audits and privacy risk assessments, submitting certifications to the CPPA. Companies with over $100 million in revenue must file their first mandatory cybersecurity audit report by April 1, 2028. The California Privacy Protection Agency has emphasized:

The goal of a risk assessment is restricting or prohibiting processing activities with disproportionate risks.

In contrast, GDPR requires Data Protection Impact Assessments (DPIAs) for high-risk processing but does not demand the same level of AI-specific documentation. Additionally, businesses must respond to CCPA data requests within 45 days, compared to GDPR’s one-month deadline.

GDPR vs. CCPA Comparison Table

| Feature | GDPR | CCPA / CPRA |

|---|---|---|

| Consent Model | Opt-in (Explicit for most AI processing) | Opt-out (Stop sale/sharing rights) |

| Automated Decision-making | Right to object to profiling/ADM | Right to opt out of ADMT for "significant decisions" |

| Algorithmic Transparency | General transparency requirements | Specific right to access logic and individual outputs |

| Age of Consent | 16 (can be lowered to 13 by Member States) | 13–16 (Opt-in required); Under 13 (Parental opt-in) |

| Maximum Penalty | €20M or 4% of global turnover | $7,500 per intentional violation |

| Enforcement Authority | Data Protection Authorities (DPAs) | CA Privacy Protection Agency (CPPA) & Attorney General |

| Private Lawsuits | Broad (Any violation) | Limited (Data breaches only) |

| Response Time | One month | 45 days |

| AI Risk Assessments | Required for high-risk processing (DPIA) | Required for ADMT, sensitive data, and selling/sharing |

| Look-back Period | Not specified | 12 months |

Meeting GDPR Requirements for AI

Complying with GDPR when deploying AI systems means going beyond the basics of data protection. Businesses must ensure transparency, accountability, and fairness throughout the AI system's entire lifecycle - from its creation to its operation and ongoing evaluation.

Making AI Decisions Understandable

GDPR insists that AI decision-making processes be clear and easy to understand. This means offering explanations tailored to different audiences: detailed technical documentation for experts and straightforward summaries for users. For high-stakes decisions, such as loan approvals or hiring, include a "Rationale Explanation" that breaks down the technical logic, input data, and resulting outcomes. If the AI generates new personal data that will be stored for future use, individuals must be notified within one month. Additionally, the system should limit data collection to only what is essential, ensuring the process is both transparent and efficient.

Collecting Only What’s Necessary

Under GDPR, data collection must be adequate, relevant, and limited to the specific purpose. Start by mapping out your AI workflows to justify why each piece of data is needed. Use de-identification or anonymization strategies before training the AI. Move away from the "collect everything" mindset - delete temporary files promptly and keep an eye on API queries for unusual activity. As the ICO points out:

"The mere possibility that some data might be useful for a prediction is not by itself sufficient for the organisation to demonstrate that processing this data is necessary for building the model."

It's also important to distinguish between data needs for training and deployment. For instance, while using a system to fulfill a contract may be valid, this does not necessarily justify using personal data to train the model. Even AI-generated inferences about individuals are treated as personal data if they relate to an identifiable person. If sensitive data categories are involved, Article 9 requirements may apply. Beyond minimizing data collection, businesses must also focus on unbiased practices.

Human Oversight and Addressing Bias

GDPR requires human involvement in decisions that significantly impact individuals. This means reviewers must have real authority to override automated outcomes. Senior management should be trained, and regular Data Protection Impact Assessments (DPIAs) should be conducted to identify and address potential biases. It's essential to differentiate between allocative harms (denying access to resources or opportunities) and representational harms (perpetuating stereotypes). The ICO underscores this responsibility:

"You cannot delegate these issues to data scientists or engineering teams. Your senior management, including DPOs, are also accountable for understanding and addressing them."

User testing should confirm that privacy information is clear and understandable. Keep detailed records of design and deployment decisions to demonstrate accountability to regulators .

Meeting CCPA Requirements for AI

The California Consumer Privacy Act (CCPA) focuses on giving consumers more control over their data and ensuring transparency. For businesses using AI systems, this translates into creating systems that can handle consumer data requests and clearly explain how automated decisions are made.

Processing Consumer Data Requests

Under CCPA, California residents are entitled to three key rights that directly impact AI operations: the Right to Know, the Right to Delete, and the Right to Opt-Out. If a consumer asks what data you’ve collected, you must provide a thorough response. This includes details about how their data was used to train AI models and what conclusions were drawn from it. For instance, if a consumer inquires, “What data have you collected about me, and why?” a business might need to disclose that their geolocation data from a mobile app was used to train an AI system designed to optimize delivery routes.

Starting April 1, 2027, businesses using Automated Decision-Making Technology (ADMT) for major decisions - such as those related to finances, housing, education, employment, or healthcare - must notify consumers before use and allow them to opt out of AI-driven processes. Additionally, businesses must explain the "logic" behind their ADMT and how AI outputs influence decisions. Importantly, opting out must be as simple as opting in.

Deleting data from AI training models can be complex, often requiring the retraining of the entire model. To manage this, businesses should implement data lineage tracking to keep records of whose data was used and what predictions were generated. Also, privacy policies need to be updated and displayed on every page where personal data is collected - not just on the homepage.

| Requirement | Effective Date | What It Means for AI |

|---|---|---|

| Expanded Access Requests | January 1, 2026 | Consumers can request data beyond 12 months, including its use in AI training and inferences |

| ADMT Opt-Out & Access | April 1, 2027 | Consumers can opt out of AI decisions and access explanations about AI logic and outputs |

| Initial Risk Assessments | December 31, 2027 | Businesses must conduct assessments for high-risk AI activities already in use |

These responsibilities extend to handling data from minors as well.

Additional Rules for Children's Data

The CCPA enforces stricter rules when dealing with data from users under 16. For consumers aged 13 to 16, businesses must secure affirmative opt-in consent before selling or sharing their data. For children under 13, verifiable parental consent (VPC) is required. These requirements are especially critical when children’s data is used to train AI models for personalization, ad targeting, or behavioral profiling.

Under CCPA, personal information includes inferences used to create profiles. If an AI system deduces characteristics about a child - such as their interests or behavioral patterns - those inferences must be disclosed and can be subject to deletion upon request. Businesses should deploy reliable age-screening methods to identify minors accurately. For children under 13, parents must be directly notified about what data will be collected, how it will be used in AI training, and how they can give consent.

Violations of these rules can be costly. CCPA fines can reach $7,500 per violation, while breaches of the Children’s Online Privacy Protection Act (COPPA) can result in civil penalties up to $53,088 per violation. To reduce risks, businesses might consider using synthetic data (artificially generated datasets) or federated learning (training AI on decentralized data). For example, Google’s Android keyboard applies federated learning to make predictions, training AI without collecting individual user data centrally. These strategies highlight the importance of robust protocols when managing minors' data to ensure compliance with privacy laws.

sbb-itb-5f36581

Compliance Strategies for AI Systems

Creating an AI system that meets compliance standards requires careful planning throughout your data pipeline. It's crucial for leadership to take responsibility for AI privacy risks - this isn't something that can be left entirely to technical teams.

Designing Clear Data Flows

Start by documenting every machine learning process that interacts with consumer information. Maintain detailed audit trails for how data moves across systems, including when it's stored by third parties. For example, under CCPA, you must track data lineage to answer "Right to Know" requests, which require you to explain how specific consumer data was used to train your algorithms.

Limit data collection to what’s absolutely necessary for your AI’s purpose. Use de-identification techniques or privacy-enhancing technologies to protect sensitive information before extracting it. To minimize security risks, separate your machine learning development environments from the rest of your IT infrastructure by using virtual machines or containers. This approach can help prevent vulnerabilities like the one discovered in January 2019, when a flaw in the Python library "NumPy" allowed attackers to execute malicious code remotely by disguising it as training data.

Additionally, delete any intermediate files - like compressed transfer files - as soon as they’re no longer needed. This helps reduce your overall data footprint and lowers the risks associated with unnecessary file retention.

Testing for Bias and Keeping Records

Perform Data Protection Impact Assessments (DPIAs) to identify and address risks. Pay close attention to potential "allocative harms", such as unfair distribution of opportunities (e.g., jobs or credit), and "representational harms", which might reinforce stereotypes or harm dignity. Compare human and algorithmic accuracy side-by-side to justify the use of AI in your project.

Regularly monitor for concept drift, where changes in your target population's demographics or behaviors make your model less accurate or introduce bias over time. Conducting reviews can help you catch these shifts early, avoiding compliance issues. The ICO underscores this point:

Fairness means you should only process personal data in ways that people would reasonably expect and not use it in any way that could have unjustified adverse effects on them.

Keep thorough logs of all automated decisions. This includes documenting instances when individuals requested human intervention, contested a decision, or had a decision overturned. Under CCPA, even "inferences drawn" to create consumer profiles count as personal information. That means if your AI deduces characteristics about someone, you’re required to disclose those inferences and allow for their deletion upon request. Failure to comply with CCPA can result in fines of up to $7,500 per violation. These measures emphasize the importance of having well-trained staff and carefully evaluating vendors.

Training Staff and Evaluating Vendors

Human reviewers must have real authority - not just a symbolic role. The ICO highlights this clearly:

Human intervention should involve a review of the decision, which must be carried out by someone with the appropriate authority and capability to change that decision.

Train your staff to interpret AI outputs, understand the underlying system logic, and override automated decisions when needed. California regulations specifically require that human reviewers "must have authority to overturn the automated decision and must know how to interpret and use any outputs of the ADMT".

When working with AI vendors, demand transparency. California’s new rules mandate that businesses developing and selling Automated Decision-Making Technology provide customers with all necessary information to conduct required risk assessments. Your contracts should clearly define whether vendors act as controllers, processors, or joint controllers. Processors must also assist in responding to individual rights requests, such as access or data erasure.

Evaluate the security of third-party code and open-source libraries used by your vendors. Include procurement clauses that require vendors to disclose details about their model parameters, data sources, and evaluation metrics to support your internal risk assessments. Ensure that all training efforts are documented in your DPIAs to show that those involved in developing, testing, and monitoring AI systems have received proper data protection training.

| Training Focus | Target Group | Key Requirement |

|---|---|---|

| AI Governance | Senior Management/DPOs | Upskilling on fundamental rights and understanding risk appetite |

| Security & Code | Technical Specialists | Evaluating vulnerabilities in third-party code and open-source libraries |

| Decision Review | Human-in-the-loop Staff | Interpreting AI logic and having the authority to override automated decisions |

| Consumer Rights | Customer Support | Handling "Right to Know" and "Right to Delete" requests related to AI data |

| Vendor Oversight | Procurement/Legal | Identifying roles (controller/processor) and ensuring proper tracking of data lineage |

Privacy-Enhancing Technologies for AI

It’s possible to meet GDPR and CCPA requirements without compromising the effectiveness of AI systems. Privacy-enhancing technologies play a key role in reducing the risk of exposing real individuals' data during AI model development and deployment.

Using Synthetic Data for Training

Synthetic data is created algorithmically to reflect real-world patterns without relying on actual records. This makes it a valuable tool for training AI models while maintaining strong privacy protections.

When synthetic data is fully anonymized, it falls outside the scope of GDPR (Recital 26) and CCPA, as it no longer relates to identifiable individuals. However, simply generating synthetic data isn’t enough to ensure privacy. To provide stronger safeguards, additional measures are necessary.

For example, applying similarity and outlier filters can help identify and remove synthetic records that too closely resemble real data. Limiting the number of records for each individual before generating synthetic data also reduces the risk of exposure.

For even greater protection, synthetic data can be combined with differential privacy techniques.

Differential Privacy and Data Anonymization

Differential privacy (DP) works by introducing noise into data or model training processes. This ensures that the output remains consistent whether or not any single individual’s data is included. The principle behind DP is simple yet effective:

The outcome of a differentially private analysis or data release should be roughly the same whether or not any single individual's data was included in the input dataset.

This approach is particularly important in preventing model inversion attacks, where attackers can reconstruct sensitive data from training models. For instance, one such attack achieved 95% accuracy in reconstructing individuals' faces from training data. Differential privacy mitigates this risk by making it nearly impossible to reverse-engineer individual data points from model outputs.

A two-stage process can further enhance privacy: first, create a differentially private synthetic dataset using algorithms like DP-SGD, then use that dataset for machine learning tasks. This approach offers mathematical guarantees against re-identification while preserving the statistical properties needed for effective AI training.

To comply with GDPR standards, ensure your anonymization methods prevent "Singling Out", "Linkability", and "Inference". Regularly evaluate your techniques to stay ahead of emerging threats.

Conclusion

Following compliance guidelines not only strengthens customer trust but also protects your business from hefty legal expenses. As WilmerHale emphasizes:

GDPR compliance therefore must be a key consideration throughout the AI development lifecycle, starting from the very first stages.

Adopting Privacy by Design is a smart way to avoid costly pitfalls before they arise.

The financial risks tied to non-compliance are a strong incentive to act early. Ignoring these requirements could lead to expensive system overhauls if, for instance, a deletion request comes up. With California's new regulations on Automated Decision-Making Technology (ADMT) and mandatory risk assessments rolling out on January 1, 2026 - and the first annual certifications due by April 1, 2028 - businesses need to get ahead of the curve.

Proactive compliance isn’t just about avoiding penalties - it’s about reshaping your processes for long-term control and accountability. Start by conducting thorough risk assessments before engaging in high-risk AI activities. Build in meaningful human oversight for automated decisions, and establish data lineage tracking systems to streamline responses to individual rights requests. These steps aren’t mere formalities; they improve your operational transparency and reliability.

As the California Privacy Protection Agency (CPPA) states:

The goal of a risk assessment is restricting or prohibiting processing activities with disproportionate risks.

FAQs

What are the key differences between GDPR and CCPA when it comes to AI data privacy?

The GDPR, applicable across the EU, sets strict requirements for AI systems when it comes to data processing. These systems must operate on a lawful basis - whether through user consent or legitimate interests - and adhere to safeguards like privacy by design, pseudonymization, and encryption. Additionally, the GDPR places significant emphasis on regulating automated decision-making. This includes ensuring human oversight and conducting impact assessments to address potential risks.

In contrast, California's CCPA takes a different approach, focusing on consumer rights and transparency. It empowers individuals by granting them rights such as knowing what personal data has been collected, opting out of data sales or sharing, and requesting data deletion. While recent amendments to the CCPA include provisions for AI-related disclosures and audits, it does not demand preemptive measures like risk assessments or the level of human intervention required by the GDPR.

In summary, the GDPR leans toward proactive measures to safeguard privacy, while the CCPA emphasizes giving consumers control over their data after it has been collected.

How can businesses comply with AI-related data deletion requests under GDPR and CCPA?

To handle data deletion requests under GDPR (right to erasure) and CCPA (right to delete), businesses need a well-defined process in place. The first step is verifying the identity of the requester, as required by law, to ensure the request is legitimate. Once verified, locate and permanently delete all instances of the personal data. This includes data stored in training datasets, logs, and feature stores. If complete deletion isn’t technically possible, the data should be de-identified or aggregated to remove any personal identifiers. Additionally, notify third-party service providers or contractors to delete the data on their end as well.

Since AI models may retain patterns from the deleted data, businesses should adopt safeguards such as versioned data pipelines, retraining processes, and regular audits to monitor and address any lingering effects. It’s also essential to document every step - identity verification, data deletion, third-party notifications, and any exceptions - to ensure a thorough audit trail for compliance.

To streamline these requests, tools like Reform’s no-code form builder can be incredibly helpful. This tool enables businesses to create branded, multi-step forms for verified requests, automates routing to the appropriate teams, and logs outcomes for compliance reporting - all without requiring custom development.

How do privacy-enhancing technologies (PETs) support AI compliance with GDPR and CCPA?

Privacy-enhancing technologies (PETs) play a crucial role in helping organizations align their AI systems with major privacy laws like GDPR and CCPA. These tools support key principles such as data minimization, purpose limitation, and giving users more control over their personal information. Techniques like synthetic data generation, differential privacy, and de-identification allow businesses to train AI models effectively - without exposing sensitive data to the risk of re-identification.

Beyond safeguarding data, PETs bolster defenses against potential breaches and help meet compliance requirements, including CCPA's "right to know" and "right to delete" provisions. By offering practical, risk-based controls, these technologies enable companies to push boundaries in AI development while staying firmly within the limits of privacy regulations.

Related Blog Posts

Get new content delivered straight to your inbox

The Response

Updates on the Reform platform, insights on optimizing conversion rates, and tips to craft forms that convert.

Drive real results with form optimizations

Tested across hundreds of experiments, our strategies deliver a 215% lift in qualified leads for B2B and SaaS companies.

.webp)